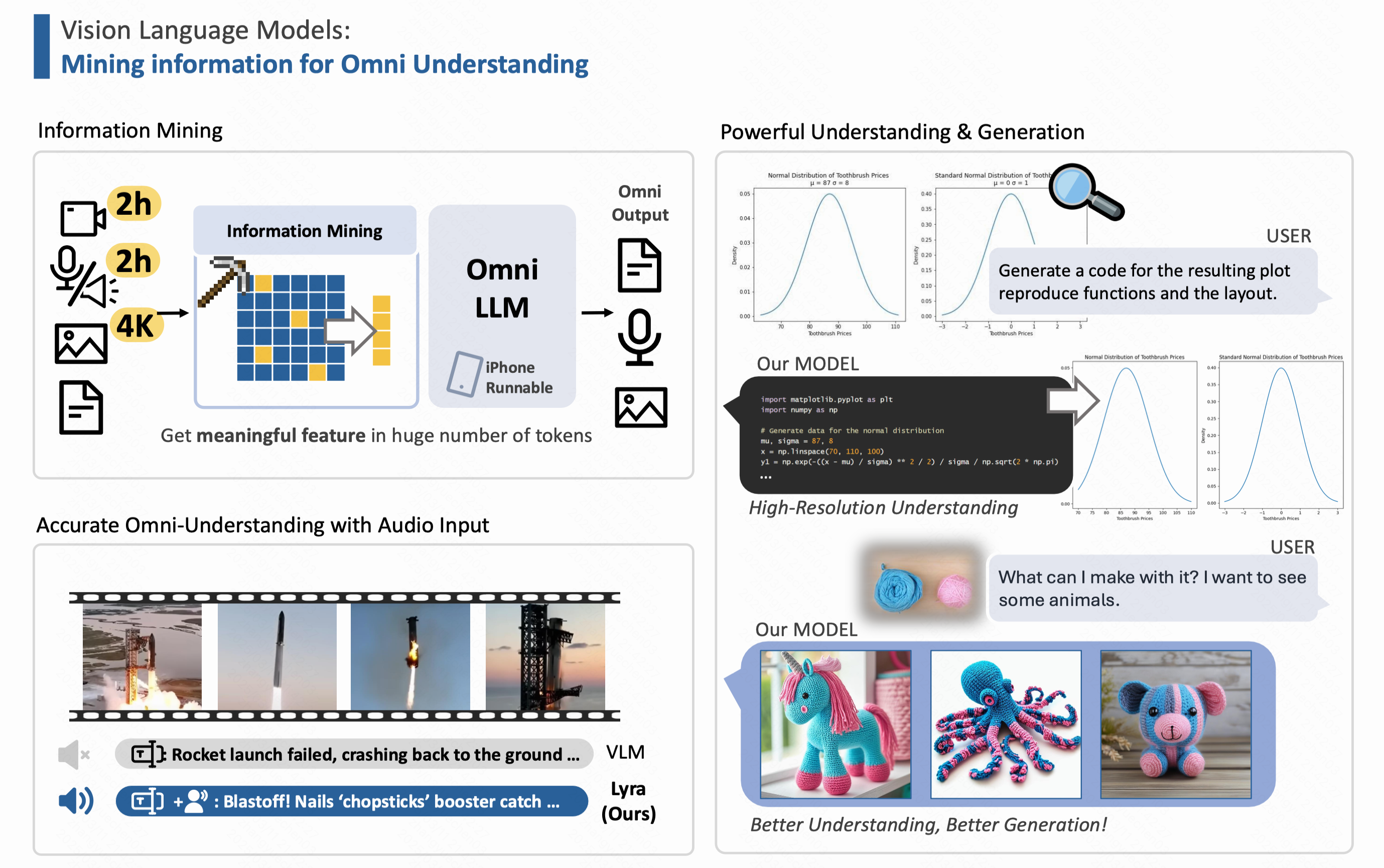

The Large Omni-Multimodal Language Model Team is dedicated to developing next-generation AI systems that seamlessly process and generate content across multiple modalities. Our state-of-the-art models integrate diverse input modalities, including images, audio, text, and video, within a unified architecture, enabling comprehensive multimodal understanding and generation.

Our pioneering multimodal large language models can process arbitrarily long inputs in any of these modalities and generate output as text, images, or audio. This unified approach removes the need for separate specialized systems, yielding a truly omni-modal AI that can translate and interpret information across modalities.

The team continues to push the boundaries of multimodal fusion, context-aware representation learning, and efficient processing of multi-stream inputs, with applications in creative content generation, assistive technologies, and human-computer interaction.